You get the feeling Dr Toby Walsh has never really been a regular university professor. One of Australia's leading artificial intelligence (AI) researchers, he's been outspoken about the dangers of his chosen field and a vocal critic of the lack of control over AI development. Also, look at the photo he put on his UNSW profile page.

But it's Dr Walsh's criticism of the way AI has crept into the army that has thrust him into the international spotlight. Back in 2015, he was the author of an open letter calling for "a ban on offensive autonomous weapons, also known colloquially as 'killer robots.'" Signed by 1,000 AI researchers, technology experts, and big names (including Stephen Hawking, Elon Musk, Noam Chomsky, and Apple cofounder Steve Wozniak), the letter also landed Dr Walsh at the United Nations, trying to convince the world's leaders that they should be worried about AI too.

Two years on from "the letter," we caught up with Dr Walsh at Semi-Permanent to find out whether countries actually heeded his warnings, to talk about advances in deadly AI, and to ask whether Terminator is basically inevitable.

VICE: I guess the first time I heard your name was when you penned that open letter about artificial intelligence in the army. Why did you want to write it?

Dr Toby Walsh: I must admit I'm a bit of an accidental activist. I didn't really realise quite how politically involved something like this would become. My colleagues and I were talking about the issue [of AI in the military], and the way the military is starting to develop these sorts of technologies. It was clear to us what the end point was going to be. We wanted to raise a warning flag about it.

A lot of very high-profile people signed your letter calling for a ban on AI in the military.

I think what was interesting was not that it was signed by some well-known people, like Stephen Hawking… but that it was signed by many of my colleagues. I don't think many people realise that the AI community is not that big, and the letter now has over 20,000 signatures. So that's many of the people, or a good fraction of the people, working in the field now who were very happy to sign it.

What's the AI community so worried about? I feel like the military has always played a pretty central role in developing new technology.

Lots of my colleagues said… "We don't want all the good from our technology to be tainted by the military using it for bad." Like any technology, AI is not good or bad. We get to make that choice. It certainly would be a very bad choice today; the technologies the military are developing will be making lots of mistakes. In the future, as it gets more sophisticated, it's going to revolutionise the speed and efficacy with which we fight war.

So you think AI would fundamentally change how we fight wars?

It's been called the third revolution in warfare. The first revolution was the invention of gunpowder by the Chinese, the second was the invention of atomic bombs, and AI will be another step. It will destabilise the planet, which is already pretty destabilised… Just as with the financial markets, where the algorithms have flash crashes, we may end up fighting wars because the machines got into some undesirable feedback loop, operating at such a rapid pace that we won't be able to step in and intervene.

Did anything actually come out of your letter? I know there can be these, sort of, flashes of media interest around open letters, and then the interest goes away.

I've been invited now to speak three times at the United Nations… There are still lots of possible obstacles in the way. The first time I talked to [them], it was clear that the problem was the Chinese. A lot of the problem is now the Russians.

I feel like this isn't the first time I've heard that this week.

Russia has now been behaving in a much more aggressive way when it comes to policy, and also in the United Nations. In fact, they were the one nation that almost didn't agree to the move to formal discussions [about AI in the military]. I think at the last moment they agreed to abstain, whereas pretty much all the other countries agreed.

The media paints this Terminator-like vision of what autonomous warfare would mean. Is that what the worst-case scenario looks like to you?

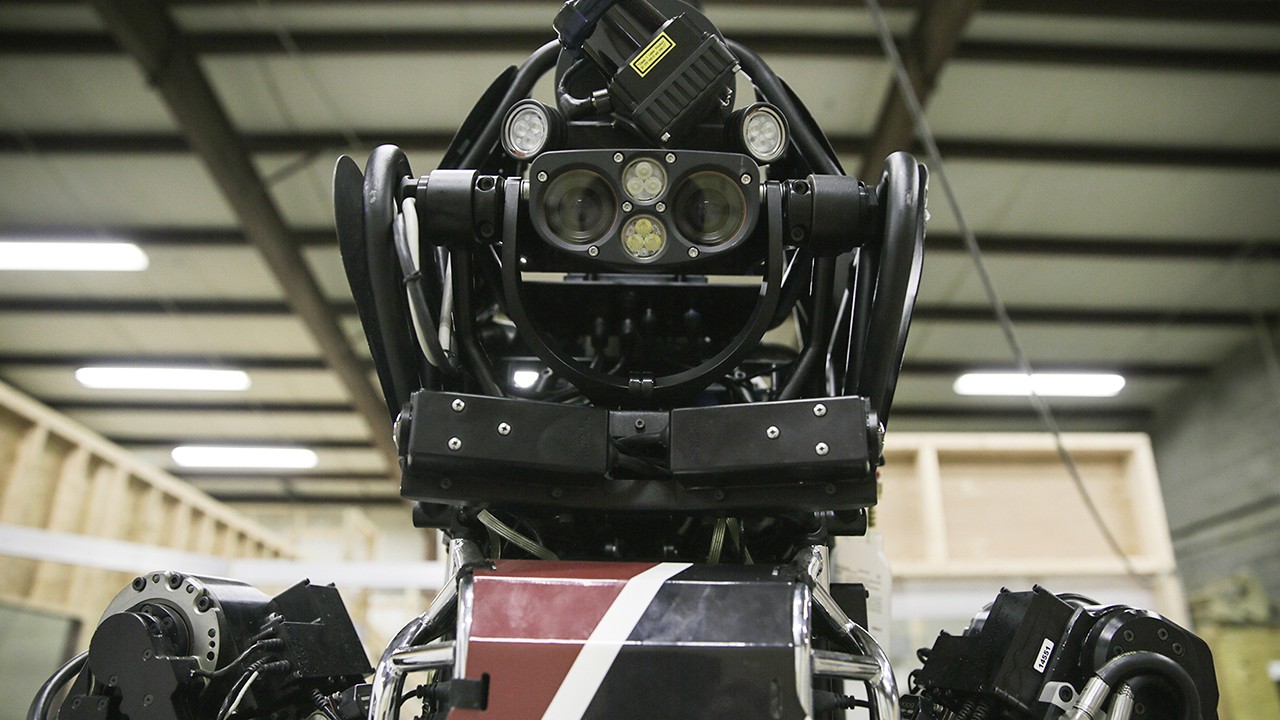

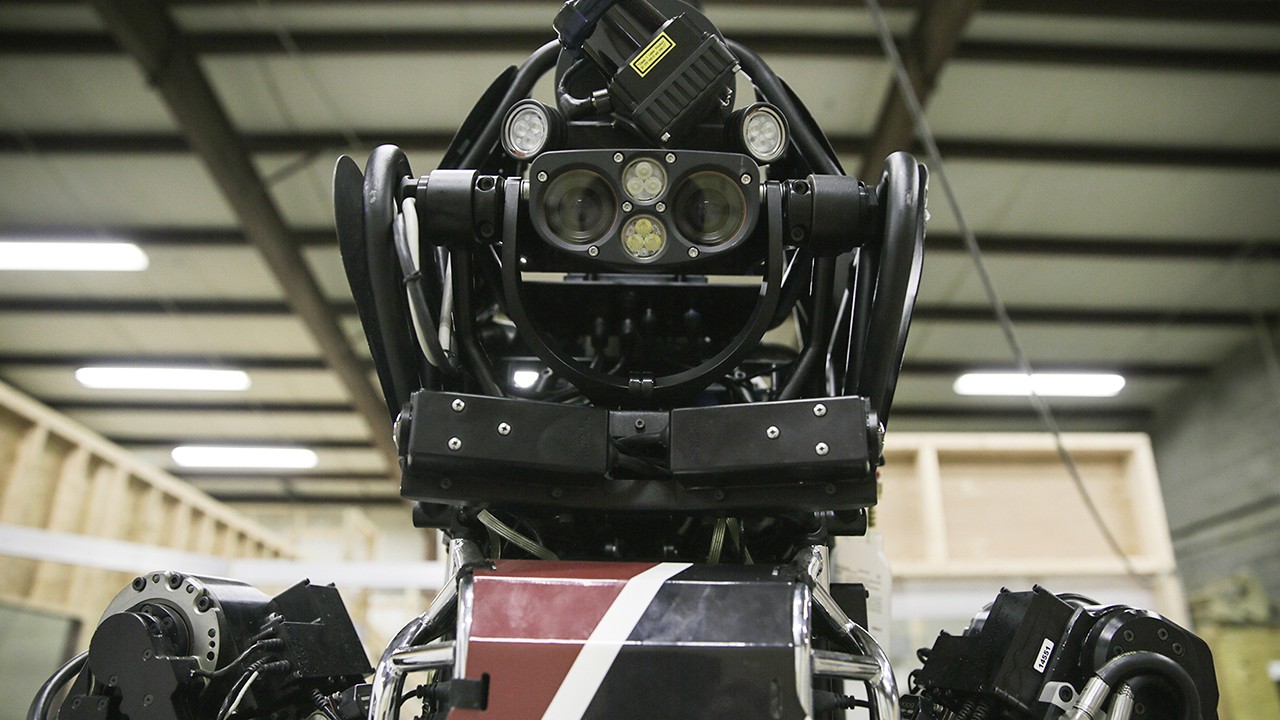

I mean, Hollywood is actually pretty good at predicting the future. And if we don't do anything, then in 50 to 100 years' time it will look much like Terminator. It wouldn't be that dissimilar to what Hollywood is painting… In fact, there will be a lot of risk well before then. The military already has prototypes of many of these technologies, which they will be fielding in the next few years if we don't do anything about it. And that's going to be not smart AI, but stupid AI.

What do you mean by stupid AI?

To begin with, it's going to be simply incompetent, without the malevolence you see in the Terminator. But, eventually, the technology will get better. And I've got to tell you, the sorts of technologies that the military are playing with at the moment make me very fearful. Especially on the battlefield, which is a very confused place. In November last year, there were these… drones that the Americans were using, and nine out of 10 of the people they were killing weren't the intended targets.

Just while we're here, it's worth pointing out that the US military has historically been the biggest funder of AI. We see, from their perspective, the great military advantage of being able to automate some of the things they do. There's no reason anyone should risk life or limb clearing a minefield. It's a perfectly good job for a robot. If anything goes wrong, you blow up the robot and no one cares. Also, when it comes to getting supplies into contested territory, we shouldn't risk anyone's life. So there are plenty of good things they can actually do with AI to make people's lives safer. But, you know, giving machines the right to make life decisions… there are lots of technical reasons why I'd say that's a bad idea, before we even get on to the moral and ethical reservations.

Plus, I guess a huge number of jobs are going to be lost to automation and AI.

There's a huge amount of uncertainty about what the future holds. There are clearly jobs that will disappear; we know that for sure. In September, Uber started trials with autonomous cars in Pittsburgh, and now in San Francisco. If you're an Uber driver, you should realise your job is going to be very short-lived. There's some irony there: this is one of the newest jobs on the planet, and it's probably going to be one of the shortest-lived.

What's the upside here? I sometimes wonder if we even actually want robots.

You know, it's hard to imagine the other benefits. But, for example, 1,000 people die on the roads in Australia each year, and that would go down to zero. It's going to transform our cities and open them up; we won't have all this poverty. We won't have all this traffic. We won't be wasting our time being a driver, which is a reductive thing to do. For most of us there will be a great benefit.

Will my job be threatened?

There are still some things that machines are bad at, and I suspect they will be bad at them for quite a while. Machines aren't as creative as us, so all the creative jobs will stay for quite a while.

There is a robot writing screenplays.

There are, yes. Machines have very poor emotional intelligence, though. All the human phase of things, again, they are likely to stay. And I'd like to point out to people that, you know, one of the safest jobs is actually one of the oldest jobs on the planet.

…Sex worker?

Being a carpenter.

Oh yeah, of course.

We're going to value, increasingly, things made by the human hand. Things that are mass-produced, the cost of those will plummet because they're made by machines that can work 24/7… [but] the things that are scarce, things that are made by our expensive human hands, will be the things that we value most. You can already see this in the music industry. Digital didn't destroy the music industry as some people feared it might. It's shifted back, ironically, to be much more old-fashioned. We're back to experiences and live shows to make money, back to things that are more analogue than digital. So, I see a lot of opportunity.

So would you say you're mostly "pro-robot," just not killer soldier robots?

There's some feeling that the future is inevitable, and it's not. The future is made by the choices we make today. If we do nothing, I suspect the future is actually going to be a rather dismal one. The rich will get richer and the rest of us will fall behind. There'll be a large number of unemployed people, living in poverty and in contempt of society. We need to make sure that all of us benefit from the prosperity and wealth that the machines will generate. But that requires us to make some interesting choices, and I think it requires some brave and bold politicians, and I don't see many of those around.

Follow Maddison on Twitter
