John Moore | Getty Images

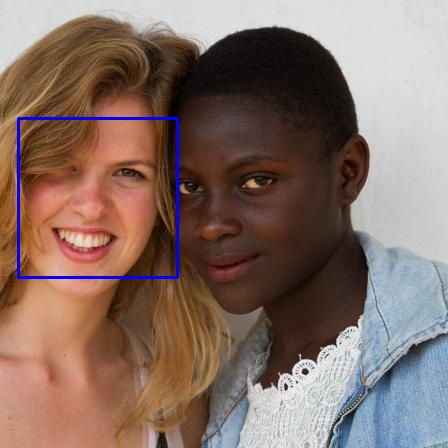

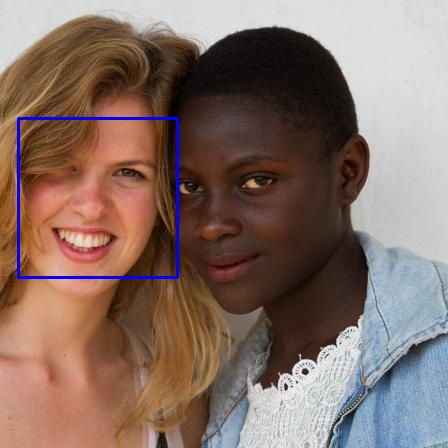

Students of color have long complained that the facial detection algorithms Proctorio and other exam surveillance companies use fail to recognize their faces, making it difficult if not impossible to take high-stakes tests.

Now, a software researcher, who also happens to be a college student at a school that uses Proctorio, says she can prove the Proctorio software is using a facial detection model that fails to recognize Black faces more than 50 percent of the time.

“I decided to look into it because [Proctorio has] claimed to have heard of ‘fewer than five’ instances where there were issues with face recognition due to race,” Lucy Satheesan, the researcher, told Motherboard. “I knew that from anecdotes to be unlikely … so I set out to find some more conclusive proof and I think I’m fairly certain I did.”

Satheesan recently published her findings in a series of blog posts. In them, she describes how she analyzed the code behind Proctorio’s extension for the Chrome web browser and found that the file names associated with the tool’s facial detection function were identical to those published by OpenCV, an open-source computer vision software library.

Satheesan demonstrated for Motherboard that the facial detection algorithms embedded in Proctorio’s tool performed identically to the OpenCV models when tested on the same set of faces. Motherboard also consulted a security researcher who validated Satheesan’s findings and was able to recreate her analysis.

On its website, Proctorio claims that it uses “proprietary facial detection” technology. It also says that it uses OpenCV products, although it does not say which products or for what purpose. When Motherboard asked the company whether it uses OpenCV’s models for facial recognition, Meredith Shadle, a spokesperson for Proctorio, did not answer directly. Instead, she sent a link to Proctorio’s licenses page, which includes a license for OpenCV.

“While the public reports don’t accurately capture how our technology works in full, we appreciate that the analyses confirm that Proctorio uses face detection (rather than facial recognition),” Shadle wrote to Motherboard in an email.

Proctorio also did not answer several questions about the technology, including whether or not the company had fine-tuned the OpenCV models for use in its software.

The failure of computer vision systems to detect and correctly identify darker-skinned faces is a well-documented issue, and Proctorio’s use of OpenCV’s models has severe ramifications for the students forced to use it. Facial recognition and detection software built on OpenCV has previously been found to be biased.

Satheesan tested the models against images containing nearly 11,000 faces from the FairFace dataset, a library of images curated to contain labeled images representative of multiple ethnicities and races. The models failed to detect faces in images labeled as including Black faces 57 percent of the time. Some of the failures were glaring: the algorithms detected a white face, but not a Black face posed in a near-identical position, in the same image.

The pass rates for other groups were better, but still far from state-of-the-art. The models Satheesan tested failed to detect faces in 41 percent of images containing Middle Eastern faces, 40 percent of those containing white faces, 37 percent containing East Asian faces, 35 percent containing Southeast Asian or Indian faces, and 33 percent containing Latinx faces.

Black students have described how frustrating and anxiety-inducing Proctorio’s poor facial detection system is. Some say the software fails to recognize them every time they take a test. Others fear that their tests will abruptly close and lock them out if they move out of perfect lighting.

“There’s no reason I should have to collect all the light God has to offer, just for Proctorio to pretend my face is still undetectable,” one student wrote on Twitter.

Satheesan said her findings help demonstrate the fundamental flaw of proctoring software: that the tools, ostensibly built for educational purposes, actually undermine education, particularly for already disadvantaged students.

“They use biased algorithms, they add stress to a stressful process … during a stressful time, they dehumanize students,” she said.
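
For readers who want to see how per-group figures like the ones Satheesan reported are tallied, the arithmetic is simple: for each labeled image, note whether the detector found at least one face, then divide the misses by the total for that label. The Python sketch below illustrates that tally only. It is not Satheesan's code; the function name `failure_rates`, the record format, and the toy data are assumptions made for illustration, and in a real test the `detected` flag would come from running the face detection model over each image.

```python
from collections import defaultdict


def failure_rates(records):
    """Return {group: fraction of images in which no face was detected}.

    `records` is an iterable of (group_label, detected) pairs, where
    `detected` is True if the detector found at least one face.
    (Hypothetical record format, chosen for this illustration.)
    """
    totals = defaultdict(int)
    misses = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        if not detected:
            misses[group] += 1
    return {group: misses[group] / totals[group] for group in totals}


# Toy data invented for illustration; these are NOT results from the study.
sample = [
    ("A", True), ("A", False), ("A", False), ("A", True),
    ("B", True), ("B", True), ("B", False), ("B", True),
]

rates = failure_rates(sample)
for group, rate in sorted(rates.items()):
    print(f"{group}: no face detected in {rate:.0%} of images")
```

Counting a miss per image, rather than per face, matches how the article phrases the results ("failed to detect faces in images labeled as including Black faces"); a per-face tally would need bounding-box matching and could give different numbers.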

