This story appeared in the February Issue of VICE magazine.

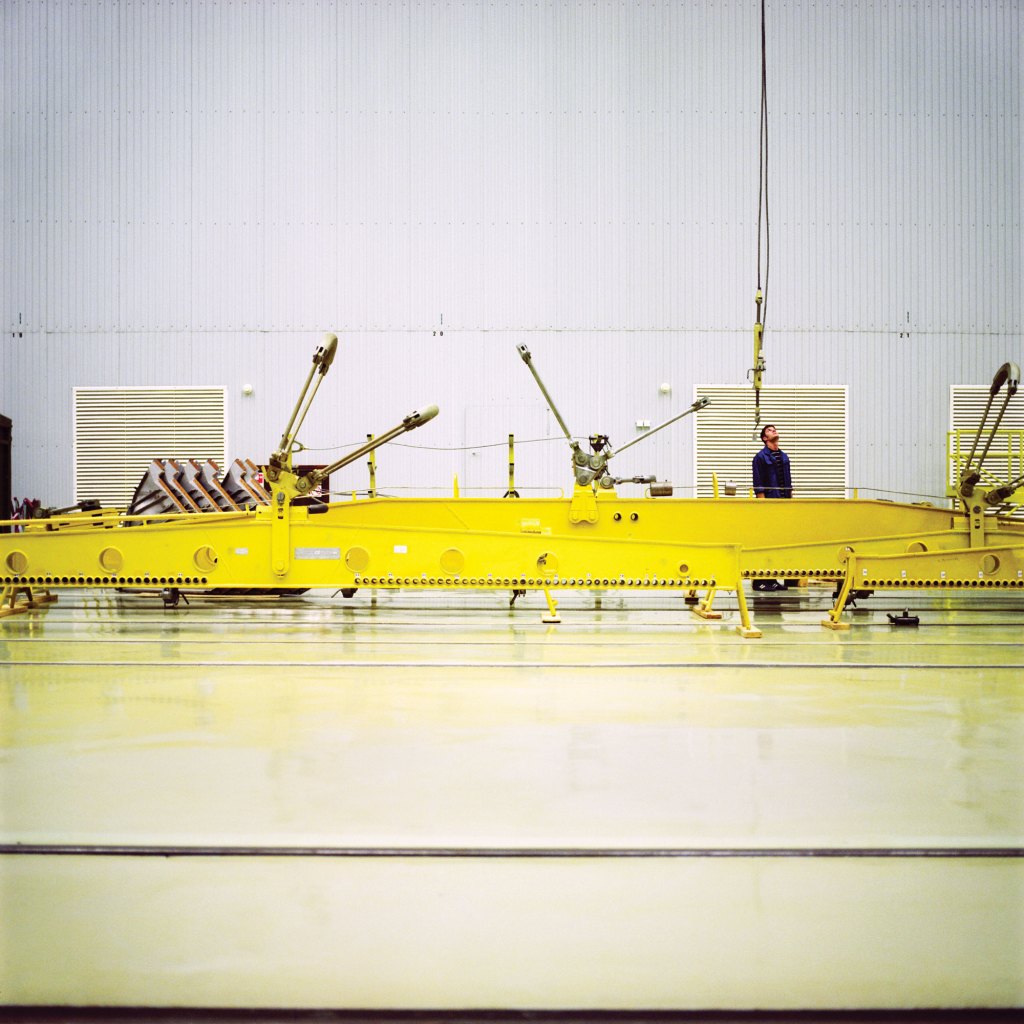

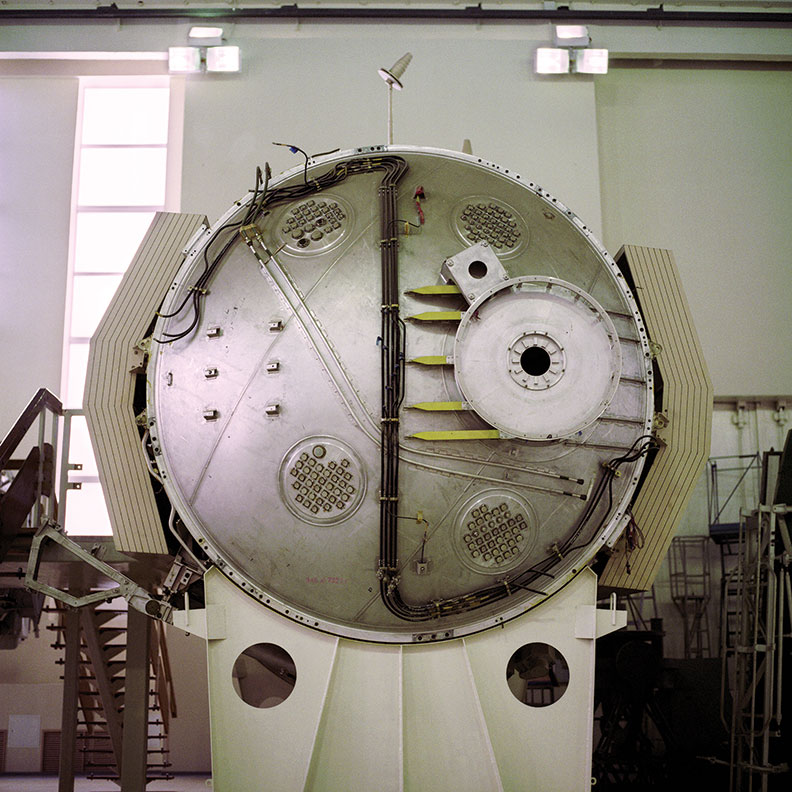

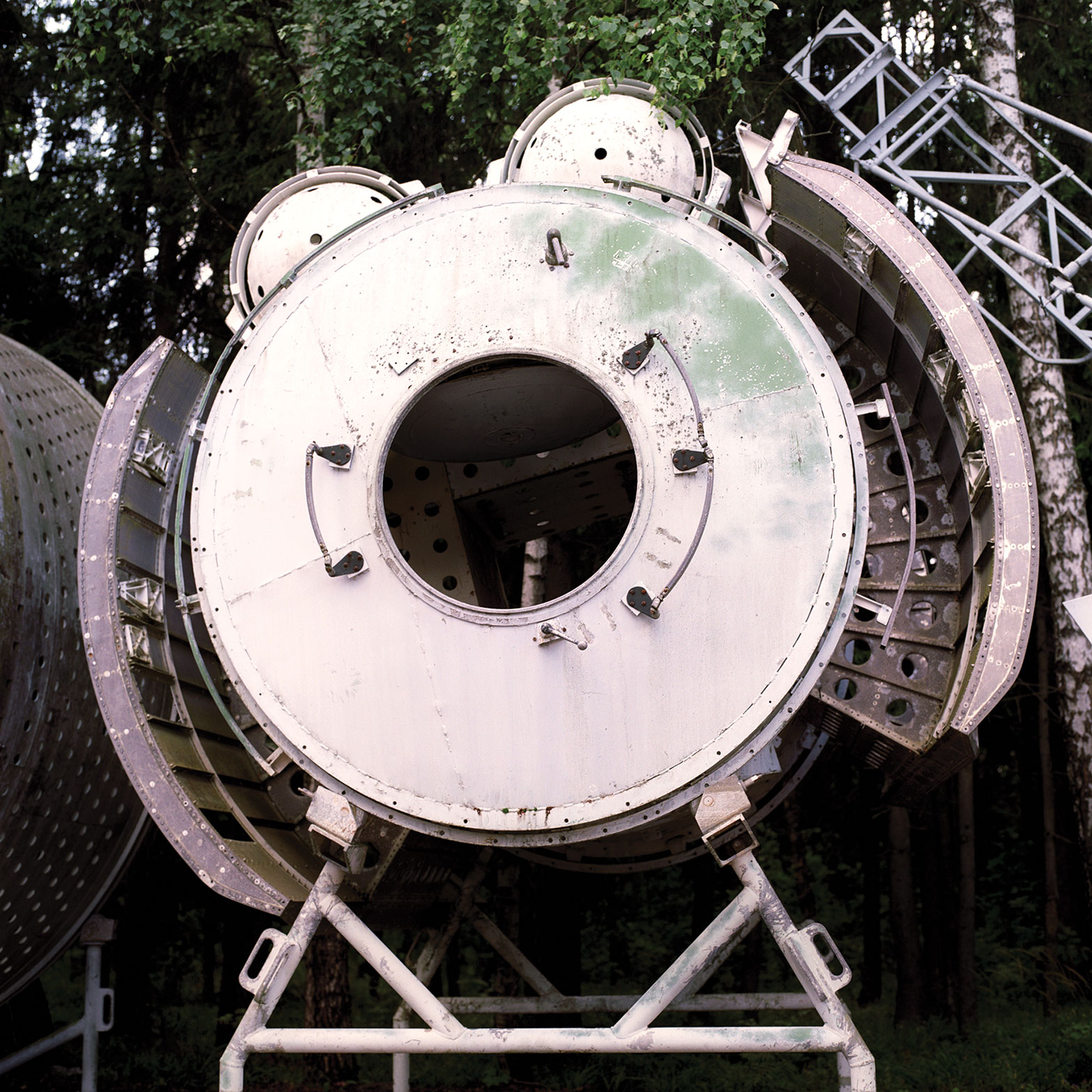

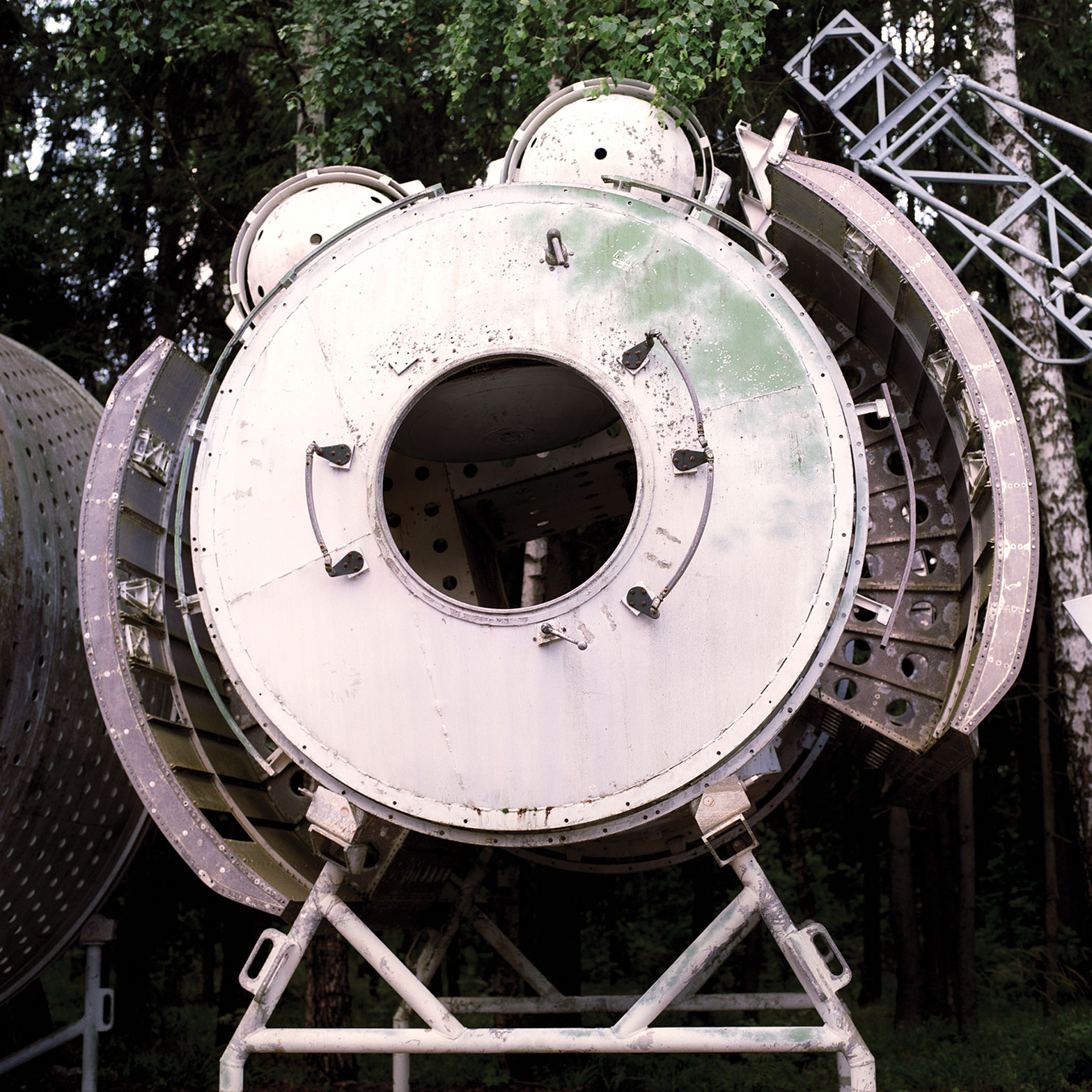

All photos by Maria Gruzdeva

1. Support Public Media, Off and Online

By Astra Taylor, co-founder of the Debt Collective and documentarian currently working on a film about democracy

Intellectuals I came of age admiring paid a lot of attention not just to media and culture, but to how they were financed. Frankfurt School theorists wrote about the culture industry drowning out democracy in service of commercial interests. Noam Chomsky demystified the process of “manufacturing consent,” painstakingly demonstrating the way even an ostensibly free press is unable to serve the public interest when beholden to the profit motive.

With the advent of networked technologies, these dynamics and dilemmas have only intensified, and yet we rarely talk about the problem of commercialism, perhaps because we are too marinated in it to see the problem clearly, let alone imagine a way out or an alternative system. Marketers have slapped their names on our museums, stadiums, schools, and beloved bands. They’ve colonized offline space; why should the internet be any different? But at least we can find some reprieve in “real life”—in a park or on a city street. In contrast, we spend almost every waking moment of our online lives (which basically means our lives) engaging with sites and services that are in thrall to advertisers—sites and services that are totally commercialized. Our virtual selves perpetually inhabit spaces that are more akin to shopping malls than public squares. What’s more, given the tendency toward monopoly online, we’re all basically hanging out in one mall—otherwise known as Facebook—while occasionally venturing off to a handful of other globally popular destinations (Google, YouTube, Amazon, Twitter, Snapchat, and so on).

There are basically no truly public, non-commercial spaces on the internet. And this is a serious problem we haven’t faced up to.

Of course, we’re not totally passive. We use ad blockers and occasionally pay directly for “content” we really like (or can’t be bothered to find for free), but we also rarely consider radical alternatives. The answer to the question of how we can make technology work better for us is that we actually need to make it work for us, for once, instead of working for commercial interests. That means we, the people, have to begin footing the bill directly, instead of indirectly funneling our money through private companies that only fund media and culture in a roundabout fashion via advertising and marketing budgets, and always with strings attached.

Advertisers are unbelievably empowered online, and this power is in desperate need of a public, non-commercial counterbalance. As things stand, advertisers control the purse strings of practically all of the most visited websites (Wikipedia, the only nonprofit significant numbers of people use regularly, is the exception that proves the rule). Advertisers used to need publishers or television stations or billboards to reach an audience, but now they can follow individuals across the web and through their apps, tracking us to gather data about our proclivities and preferences and targeting us with personalized appeals. As the aphorism rightly states, “If you are not paying for the product, you are the product.”

As internet users, we often complain about the consequences of commercialism without acknowledging the root cause. We lament surveillance and the death of privacy, without looking at the incentives driving corporate data collection. We talk about being addicted to or distracted by social media, but not about the fact that advertiser-driven sites and services need us to restlessly “engage” to boost the metrics that determine how much money is made. (Where Chomsky spoke about manufacturing consent, we now live in an era of manufactured compulsion, because our obsessive clicks, whether we “like” or hate whatever we’re looking at, directly translate into cash.)

Or take the recent controversy over “fake news.” On the one hand, we are seeing the unprecedented triumph of a presidential administration willing not just to countenance but also to amplify unbelievably destructive, baseless bullshit—and the emergence of an enormous audience hungry for memes and mashups that suit their confirmation biases, truth be damned. But fake news didn’t emerge, sui generis, out of the nightmare of the 2016 election or even out of the bowels of the internet. It is the logical conclusion of a monopolistic, profit-hungry, advertiser-driven economic model with roots that go back decades. The conditions that create the scourge of “fake news” online are also those that have driven respectable print newspapers to lay off reporters and close foreign bureaus, and that continue to make the National Enquirer a successful enterprise.

The only solution to this madness is public media, which, contrary to right-wing fearmongering, doesn’t mean state-controlled. It means online and offline journalism and culture paid for by the public and in the public interest. It means taking back the money and power we give to advertisers to shape culture to their private ends and investing that wealth—our wealth—ourselves, into things that really matter. The alternative is watching our democracy be strangled by corporate monopolies and clickbait. At least no one can say they weren’t warned.

2. Don’t Forget Our Values

By Ben Wizner, director of the ACLU’s Speech, Privacy, and Technology Project, and Edward Snowden’s lawyer

In the disoriented aftermath of the September 11 attacks, the writer and activist Wendell Berry predicted that the shock of that event would forever be associated with the end of the “unquestioning technological and economic optimism” that had marked prior decades. Surely we would be forced to recognize that our most prized innovations could be turned against us—that one of the singular inventions of 20th-century science could be transformed in an instant into a crude weapon of 21st-century mass murder. Surely, too, we would finally acknowledge that we could not “grow” or “innovate” our way out of every global challenge.

But Berry was quite wrong. If anything, the decade and a half that followed—bookended by the twin traumas of 9/11 and the ascension of Donald Trump—saw an acceleration of the trends that Berry deplored, with “innovation” cementing its status atop a new normative hierarchy, and technology extolled, in the words of Google’s Eric Schmidt, as the means “to fix all the world’s problems.” And this idolatry of innovation was not confined to Silicon Valley; it migrated to the heart of the surveillance state in the person of Keith Alexander, former director of the National Security Agency, a proud “geek” who enlisted a Hollywood set designer to build a command post modeled on the bridge of the Starship Enterprise, “complete with chrome panels, computer stations, a huge TV monitor on the forward wall, and doors that made a ‘whoosh’ sound when they slid open and closed.” As an unnamed government official told the journalist Shane Harris, “Alexander’s strategy [was] the same as Google’s: I need to get all of the data.” The immediate benefits of deploying a global surveillance dragnet and capturing communications on a mass scale might not be apparent, but Alexander shared the naïve optimism of big-data cheerleaders that the NSA’s surveillance time machine would only solve problems, not cause them.

That optimism was too often accompanied by a fundamental disdain for law and politics, which were seen as messy obstacles rather than guarantors of shared values. The masters of the surveillance economy managed to exempt America’s tech sector from the standard consumer protections provided by other industrialized democracies by arguing successfully that it was “too early” for government regulation: It would stifle innovation. In the same breath, they told us that it was also “too late” for regulation: It would break the internet. Meanwhile, the high priests of the security state not only concealed their radical expansion of government surveillance from the public, but also affirmatively lied about it—while ensuring that legal challenges were shut down on secrecy grounds. In both sectors, there was little thought given to the possibility that we might one day hand the keys to these systems to malevolent actors with contempt for democratic norms.

One person who did try to warn us was Edward Snowden, who in 2013 presciently anticipated our current moment: “A new leader will be elected, they’ll find the switch, [they’ll] say that ‘because of the crisis, because of the dangers we face in the world, some new and unpredicted threat, we need more authority, we need more power.’” Snowden worried that a leader with authoritarian tendencies, perhaps emboldened by public reaction to terrorist attacks, would turn the government’s colossal surveillance systems inward, against its own minorities or dissidents—a nightmare scenario that seems a lot less far-fetched today.

Snowden’s message—that it’s myopic to consider only what technology can do for us, and not what it might just as easily do against us—will be a vital one in the Trump era. How many of today’s entrepreneurs are imagining how their innovative products might be used against their own customers? The Dutch authorities certainly never contemplated that Nazi occupiers would use the country’s national registration to target Jewish citizens for genocide—but we, having seen all too many examples of aggregated data being used for persecution rather than progress, will not be able to plead ignorance.

The democratic stress test of a Trump presidency should be the wake-up call that Wendell Berry believed we had heard 15 years ago. Technologists who have grown used to saying that they have no interest in politics will realize, I hope, that politics is very interested in them—and that if we allow our world to be shaped by our innovations without also being constrained by our values, we just might end up handing a loaded gun to a madman.

3. Rethink Our Governing Ideals So We Can Reconnect with Natural Systems

By Daniel Pinchbeck, author of How Soon Is Now?

The recent film Arrival provides an excellent metaphor for our current approach to technology. In the film, the humans can’t understand that the futuristic technology the alien “heptapods” are offering humanity is not a new weapon or energy device, but their language, which reframes and restructures the way one understands the nature of time and existence. For the most part, in the real world, we have based our technology on a model of domination and control of nature, rather than seeking symbiosis by learning from and copying nature’s no-waste processes.

As our technologies become more powerful, they increasingly separate us from the natural world and give us the false belief that we are somehow outside nature or beyond its control. This has led to a new, almost religious faith in the “singularity,” the threshold when humans merge with machines, or when artificial intelligence becomes conscious and able to direct its own development beyond the control of human agency (a prospect that many scientists find frightening). Unfortunately, in our euphoria over our mechanical powers, we tend to forget the destructive impacts they have on the diversity of human cultures and Earth’s ecology, of which we are a part.

Humanity is currently confronting an ecological mega crisis that some scientists say could lead to our extinction as a species in the next century or two. We are losing 10 percent or more of Earth’s remaining biodiversity every ten to 15 years as global warming accelerates. We are triggering positive feedback loops that may be irreversible. It is our success as a technological species that is causing this onslaught of destruction.

As theorists such as Paul Kingsnorth of the Dark Mountain Project have noted, we are in a “progress trap,” where each new layer of technology unleashes a cascade of deepening ecological problems, which then require new innovations to forestall their worst effects. For instance, plastics and chlorinated pesticides have concentrated in the food chain, causing cancers and other maladies. Billions of dollars are now spent on complex new cancer treatments, rather than ending industrial practices that have proved to be destructive.

In my book, How Soon Is Now?, I propose that we need to think about all of the current crises confronting our human community from a systems perspective and develop a systemic solution, based on a new worldview. We need to evolve our technologies—industrial, agricultural, and social—but we can only do this properly when we have reached a comprehensive understanding. We need a new myth or vision of our purpose on this planet.

To make our technologies work better for us, we are going to have to turn our attention away from distractions and dominating nature and collectively focus on the ecological crisis. We could undertake, on a global scale, a mobilization like the effort made by the United States after Pearl Harbor, during which the US transformed its factories for wartime production. Taxes on the wealthy were raised to as much as 94 percent. Similarly, today, we could transform the energy system and switch to renewables within a decade, if this became our focus. We also need to transform food-production technologies away from industrial agriculture toward regenerative-farming practices that rebuild topsoil. At the same time, we must change factory production to cradle-to-cradle practices that do not cause further damage to our environment.

All of this—and more—is technically possible, but our current beliefs and social systems keep us from accomplishing it.

To address the changes we need to make to our industrial and agricultural system globally, we also need new social technologies—new ways of making decisions together, and new ways of exchanging value. The internet can provide the basis for this—but at the moment, corporate interests have thwarted its original liberating promise. Just as Arrival dramatizes, we are in a race against time to develop technologies of cooperation and communication that supersede our current operating system, based on domination, fossil fuels, nuclear weapons, debt-based currency, and winner-take-all competition.

4. Start with Tech Companies

By Dr. Hany Farid, professor and chair of the Computer Science Department at Dartmouth College and a senior adviser at the Counter Extremism Project

Online platforms today are being used in deplorably diverse ways: recruiting and radicalizing terrorists, buying and selling illegal weapons and underage sex workers, cyberbullying and cyberstalking, revenge porn, theft of personal and financial data, propagating fake and hateful news, and much more. Technology companies have been and continue to be frustratingly slow in responding to these very real threats with very real consequences.

The question we must answer, therefore, is not only how we can make technology work better for us, but also how we can make technology companies work better for us.

Technology companies, and in particular social media companies, have to wake up to the fact that their platforms are being used for illegal activities with, at times, devastating consequences. These companies need to take more seriously their responsibility for the actions being carried out on their platforms. And these multibillion-dollar companies need to harness their power to more aggressively pursue solutions.

The current wave of troubling online behavior is not new. In the early 2000s, in response to the growing and disturbing abuse of young children, the Department of Justice convened executives from the top technology companies to ask why they were not eliminating child predators from their platforms. These companies claimed that a range of technological, privacy, legal, and economic challenges was preventing them from acting.

Over the next five years, the Department of Justice continued to pressure the industry to act. In response, the industry did nothing.

Between 2008 and 2010, working in collaboration with the National Center for Missing & Exploited Children and Microsoft, I co-developed a technology called photoDNA that allows technology companies to detect, remove, and report material associated with child exploitation. Once photoDNA was developed and freely licensed, it became increasingly difficult for technology companies to justify inaction. Sustained pressure from the media and the public eventually led to the worldwide deployment of photoDNA. Today, virtually all the major technology companies around the world have adopted this technology. This technology is responsible for removing illegal content worldwide without any impact on the core mission of the participating technology companies.

The broader point is that technology companies—with the exception of Microsoft—were less than forthcoming in their assessment of their ability to remove this horrific content and in the impact that it might have on their business. Despite this lesson, we continue to hear the same excuses when technology companies are asked to rein in the abuses on their platforms. Most notably, in the fight against online extremism, technology companies have been largely indifferent to the fact that their platforms are being used to recruit, radicalize, and glorify extremist violence (although major technology companies recently announced a proposal to act, they have not yet done so). This is particularly frustrating given the fact that technology similar to photoDNA could go a long way to finding and removing extremism-related content.

If technology can bring us self-driving cars, eye-popping virtual reality, and the internet at our fingertips, surely we can do more against everything from online extremism to fake news. The complexity of making difficult decisions on how to address these challenges should be debated. We should not, however, let this complexity be a convenient excuse for inaction.

Technology companies should be motivated to act not just for the social good but also for their own good. The public is impatient, and will grow more so, with their intransigence and indifference to the harm that their technology is yielding. The fight is happening. And the time is now for technology companies to get into it, and win it, by taking their social responsibility more seriously.

5. Educate and Be Inclusive in the Cognitive Era

By Lexie Komisar, senior lead, IBM Digital Innovation Lab

One of the greatest catalysts for transformational change in the 21st century is artificial intelligence, which has the potential to remake industries ranging from healthcare, commerce, and education, to security and the Internet of Things. AI will also reshape our personal and professional lives, and it stands to make the world a safer, healthier, and more sustainable place.

At IBM, we think of AI as augmented intelligence—not artificial intelligence—because it works side by side with humans, helping us create, learn, make decisions, and think. Let’s consider how cognitive computing has come of age. With the rise of social media, video, and digital images, now 80 percent of the world’s data is “invisible” to computers. Cognitive computing takes such information and pulls out insights and recommendations, to help people make better decisions, because over time cognition can understand and learn. It stands to reason that our rapidly expanding abilities to make sense of the growing body of digital information will unlock the secrets to many of the world’s biggest mysteries and challenges.

IBM’s Watson may be the best-known example of cognitive computing, and it’s already making an impact on our lives, whether we know it or not. Watson makes your weather app more personalized, it helps brands understand what customers are saying on social media, and it assists oncologists looking for personalized treatments for patients. Medtronic, a medical-device maker, uses Watson to predict hypoglycemic episodes in diabetic patients nearly three hours before they happen, and other companies have employed the technology to mitigate the effects of climate change—like the startup OmniEarth, which has utilized it to combat drought.

Indeed, the way that millions of people live and work will be impacted by cognitive technologies; that number is estimated to be more than a billion by the end of 2017.

However, to use these tools to reshape our world for the better, AI and cognitive computing need to be as available to as many people as possible. To prepare for the future, we must meet rapidly expanding demand for AI and cognitive skills, which can drive accessibility as well as democratization of the technology.

To make that happen, organizations need to work to develop new ways to make technology education more accessible. For instance, at IBM, we have partnered with digital-learning companies like Codecademy and Udacity to build new courses for building AI skills.

There are other ways to make it easier for students and professionals to retool their careers around AI. Doing so also involves being inclusive across recruitment, hiring, training, and career development. It means creating career opportunities for people with different backgrounds and qualifications, including people without four-year degrees. We call these “new-collar jobs.” Many new-collar jobs are in some of the technology industry’s fastest-growing fields, including data science, cloud, cybersecurity, and design.

We also have to rethink education itself. IBM has helped build a new educational model called P-TECH, which combines high school and community college for students, many of whom are from underserved communities. The P-TECH model, which will reach up to 80 schools nationwide, makes education more accessible and unleashes the potential of technology for good.

Whether it’s building AI skills for the next generation of technology, or reinventing education for the next-gen workforce, it’s important for technology companies to lead the way as we face both the unprecedented challenges and opportunities in our cognitive age.

6. Utilize Autonomous Vehicles to Rethink Mobility

By Karl Iagnemma, principal research scientist at MIT and CEO at nuTonomy

Technology is often a force for social change, but only sometimes a force for social progress. Consider one of the most important technological developments of the last century—the automobile. There’s no doubt that cars made personal transportation faster, cheaper, and more convenient. But the social legacy of the automobile is mixed. Tailpipe emissions pumped greenhouse gases into our atmosphere and warmed the climate. Highways tore through urban neighborhoods and contributed to sprawl. No one wants to go back to the days of horse-drawn carriages, but it’s worth asking if we could have deployed this technology more wisely.

Over the next several years, advances in autonomous vehicle (AV) technology will change society as dramatically as the automobile has. Even if we do nothing but trade in our personal cars for self-driving cars, our lives will change for the better. Since human error contributes to 94 percent of all automobile crashes, AVs will save many of the 38,000 lives lost to traffic accidents in the nation each year—and reduce the 1.2 million lives lost globally. Self-driving cars will bring down the cost of insurance, one of the major expenses of car ownership. We’ll also recover the time we currently spend driving to use as we wish. People who are unable to drive themselves—and that includes all of us, if we get old enough—will gain new freedom.

If we really want to unlock the social benefits of AVs, however, we must rethink our view of mobility. For most of us, mobility means commuting solo in our individually owned, gasoline-powered vehicles and leaving them parked for most of the day—just as we did in the 1950s. That model of mobility has been costly, to our wallets and our environment. Here’s how we should use AV technology to transform mobility.

First, we should rethink the model of personal car ownership. When you own a vehicle, you’re investing in a rapidly depreciating asset that will sit idle for most of the day. Until now, we’ve accepted this inefficiency out of convenience. AVs, however, will be efficient without sacrificing convenience. Hail a ride on an app, and an AV will pick you up within minutes. If you don’t mind sharing the ride with a fellow passenger, the app will coordinate your ride with others and save you all money. When you’ve arrived at your destination, that same vehicle will continue on its way, to transport other passengers or deliver parcels.

Next, as we move from individual vehicle ownership to use of shared AV fleets, we should transition from gasoline-powered to electric vehicles. One of the remaining obstacles to widespread adoption of electric vehicles is range anxiety. When we purchase a car, we want to know that we can take that 500-mile trip to our grandparents’ without worrying about finding a charging station—even if we only take that trip once per year. When companies purchase a fleet of AVs, however, they can cover most trips that their riders demand with electric vehicles and leave the yearly trip to grandma’s to a gasoline-powered rental car. Electric AV fleets will emit less carbon into the atmosphere and cause less damage to our environment.

Finally, as AVs transform mobility, we should rethink where we live and work. In the past decade, some Americans have returned to cities from the suburbs, and more might move back if rents were more affordable. AVs could help make cities affordable by reducing the cost of transportation and the cost of land. (This might seem counterintuitive. After all, if AVs reduce the cost per mile traveled, wouldn’t that make it cheaper to commute from farther away? That’s true in an individual-vehicle-ownership model, but not in a shared-fleet model.) The more passengers that an AV carries, and the fewer miles between each ride, the more revenue the AV generates and the more savings the company can pass on to consumers. Therefore, the cost per mile of riding an AV will be lower in dense urban areas. Additionally, AVs will reduce the need for parking, making valuable city land available for housing and office development.

Whether AVs simply adapt to our existing mobility model or transform society is up to us. Market forces will play a role, as insurance companies lower premiums for AVs and riders opt to share rides to save money. Public policy will also influence the future of mobility. Governments should encourage the development of AVs by permitting real-world autonomous testing, especially in urban areas. They should invest in infrastructure—such as electric-charging stations—that will expedite the transition to electric AVs. They should reform zoning rules that require developers to build parking, so valuable land can be used for housing and offices.

The development of self-driving cars gives us a chance to improve the social institutions and practices that we’ve built around the automobile. Let’s use AV technology to drive toward a safer, cleaner, and more efficient tomorrow.

7. Take Power Back

By Samy Kamkar, privacy and security researcher

Technology is dangerous. As you walk around, smartphone in pocket, it’s collecting identifying information about you and about every wireless network around you. Apps are running and engaging the microphone to listen for ambient sounds to build a targeted profile of you. Your laptop camera can be turned on without the record light displaying, allowing hackers and even the government to watch everything you do. Hackers can remotely control your vehicle (more computer than car) over the internet, the wheel and transmission entirely directed by their fingertips. This is not some dystopian future. This is technology today, and it has wonderfully terrifying command over your life, whether you know it or not. Rather than banishing electricity and living in the forest, with some basic knowledge, you can regain control.

Mobile devices are often the most fused into our lives, and the underlying apps can have some intriguing (read: devastating) powers. Apps running in the background can learn your PIN or see what you type by harnessing the incredible accuracy of the accelerometer and gyroscope in your device, measuring the physical movement of the phone as you tap each key. Even more amazingly, researchers have demonstrated that an app on your phone can listen to the ultrasound, inaudible to humans, that your computer’s processor emits when performing various functions. Each unique sound produced correlates to a specific operation, allowing an attacker to extract encryption keys, passwords, and even what you’re typing from your computer, using only your phone’s mic.

As technology creeps into other areas of our lives, we continue to see excellent examples of how its weaknesses can move from the extraction of secrets to bodily harm. Hundreds of thousands of people were left without power one cold, winter night in Ukraine when hackers gained access to and took down electrical breakers and prevented the actual employees at a power grid from turning them back on. And in 2015, security researchers used the same systems that allow for fancy features like adaptive cruise control and parking assist to take over a Jeep Cherokee’s brakes, steering, and transmission remotely over the internet.

Although some abuses of technology can be frightening, the pros often outweigh the cons, and with some conscientious thought, we can make better decisions about how and what technology we integrate into our lives. A simple backup plan can make all the difference. If you have data that is important to you, be it emails, pictures, or documents, then create your own backups. If your data is ever deleted from the cloud by mistake or by malice, you can be confident you have a local copy. In the case of a local disaster such as fire or theft, using an inexpensive cloud-based backup service is also sensible.

Thinking twice about app access will also keep you safer. When installing new mobile or tablet apps, or integrating with existing social networks, are they asking for peculiar permissions? Is there any reason to share your microphone with the app if it asks? Does providing your location to a website always make sense? Sometimes it does, but you need to decide whether you’re comfortable with sharing this information. If purchasing a new piece of tech, does it make sense to be internet enabled? Does your toaster need to be on the internet via your personal network at home? Does your car really need to send you email, and if it does, should it use your personal email account? Or why not set up a separate email account for devices other than your personal laptop and phone?

And the number one way to protect your data, accounts, and digital life is to use a different password for each account, and a password manager like LastPass or KeePass to manage the multiple passwords. When available, enable two-factor authentication on the services you use—this will prevent a stolen or hacked password from being used, as the attacker would also need access to your phone.
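For readers curious what "a different password for each account" looks like in practice, here is a minimal Python sketch of the kind of random generation a password manager performs. It is an illustration only, not how LastPass or KeePass actually work; the function names, the account names, and the 12-character minimum are assumptions made for this example.

```python
import secrets
import string

# Characters to draw from: letters, digits, and punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password built from a cryptographically secure source."""
    if length < 12:
        # The 12-character floor is an assumption for this sketch, not a standard.
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def passwords_for(accounts: list[str], length: int = 20) -> dict[str, str]:
    """Generate an independent password for each account name."""
    return {account: generate_password(length) for account in accounts}


# Hypothetical account names, for illustration only.
vault = passwords_for(["email", "bank", "social"])
for account, password in vault.items():
    print(account, password)
```

Because each password is generated independently, a breach of one account reveals nothing about the others. A real password manager also encrypts the stored passwords, which this sketch does not attempt.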

As technology weaves into your life, being just a little bit more attentive lets you take back power and make tech work better for you.

8. Hold Technology Accountable to the Public

By Cathy O’Neil, author of Weapons of Math Destruction, former Wall Street quant, and founder of ORCAA, an algorithmic auditing company

Near the end of 2013, in Rick Snyder’s Michigan, the state’s unemployment insurance agency began using an artificial intelligence system called MIDAS, which stands for Michigan Integrated Data Automated System. Unemployment insurance claims would henceforth be automatically verified and scrutinized, resulting in 22,427 accusations of fraud over the next two years.

There was a problem, though. A recent review of the system found that MIDAS was wrong about fraud a whopping 93 percent of the time, representing more than 20,000 people falsely accused. Such people were subject to fines of up to $100,000 and tax garnishments, even if they’d never received any unemployment payments. Moreover, many people didn’t know how to appeal these decisions, due to the opaque nature of MIDAS, and many went into bankruptcy.

So far, $5.4 million has been repaid to fewer than 3,000 people, while Michigan’s unemployment agency’s contingency fund, which collected the fines mentioned above, has ballooned to $155 million from $3.1 million in 2011. The MIDAS touch indeed. Some of that money has been earmarked to balance Michigan’s budget.

So, did MIDAS fail? Or, better yet, for whom did MIDAS fail? It clearly failed for those people falsely accused. But from the perspective of Michigan’s unemployment insurance agency, it might be seen as a success, if a Machiavellian one.

Now to the more general question: How can we make technology work better for us? The answer, as above, depends on who we are.

The truth is, technology is already working great for those who own it. Facebook might be distorting our sense of reality and creating an uninformed, hyper-partisan citizenry with its automated feeds and trending topics, but it’s also making record profits. So anyone among the tiny group of Facebook co-founders, or the small group of Facebook stockholders, might say technology is working great. But if we represent the public at large and are concerned with an informed citizenry, not so much.

We’ve spent too long welcoming technology with open arms and no backup plan. We especially deify big data and machine-learning algorithms because we believe in and are afraid of mathematics. We expect the algorithms and automated systems to be inherently fair and unbiased because—well, because they’re mathematically sophisticated and opaque. As a result of this blind faith, we seem more afraid of Facebook hiring biased human editors than of Facebook’s greedy algorithm destroying democracy.

It’s time to hold technology accountable to the public.

The obstacles to doing this are real. The complexity, the opacity, and the lack of an appeals system team up to make us feel the inevitability of automation. Facebook and Google don’t have customer-support numbers to call, just as Michigan didn’t have a mechanism to appeal a MIDAS fraud decision. Moreover, the harms are often diffuse—externalities that are nearly impossible to precisely quantify—whereas the profits are concentrated and very easily counted. Even so, we have enough examples of deep harm to know that secret, powerful, and unaccountable technological systems are damaging us.

But there’s good news. These are design decisions, not inevitabilities. MIDAS, for example, could be reprogrammed to treat a false accusation as just as damaging as a fraud gone undetected, making it much more sensitive to that particular wrong, even at a slight loss of income in fines. Social media could be designed to promote civil discourse instead of trolling and could expose us to alternative views, instead of forcing us to live in echo chambers, even if it might mean our spending slightly less time shopping online.
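To make the idea of weighing errors concrete, here is a minimal sketch in Python of a cost-weighted decision rule. It is not how MIDAS works; the system’s internals aren’t public. The sketch assumes a hypothetical system that produces a fraud probability for each claim, and the function names and cost values are invented for illustration.

```python
def flag_threshold(cost_false_accusation: float, cost_missed_fraud: float) -> float:
    """Probability above which flagging a claim has the lower expected cost.

    Flagging costs (1 - p) * cost_false_accusation in expectation;
    not flagging costs p * cost_missed_fraud. Flag only when the
    second exceeds the first, which gives the threshold below.
    """
    return cost_false_accusation / (cost_false_accusation + cost_missed_fraud)


def should_flag(fraud_probability: float,
                cost_false_accusation: float,
                cost_missed_fraud: float) -> bool:
    """Decide whether to accuse, given how much each kind of error is weighed."""
    return fraud_probability > flag_threshold(cost_false_accusation, cost_missed_fraud)


if __name__ == "__main__":
    p = 0.3  # hypothetical: the system thinks fraud is 30 percent likely
    # A system that weighs a missed fraud nine times as heavily as a false accusation:
    print(should_flag(p, cost_false_accusation=1, cost_missed_fraud=9))
    # A system that weighs both errors equally:
    print(should_flag(p, cost_false_accusation=1, cost_missed_fraud=1))
```

Under these assumed numbers, the same 30 percent suspicion triggers an accusation in the first system and not in the second. Nothing about the mathematics forces one choice over the other; someone decides how much a wrongly accused person counts.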

Let’s make technology work better for us by making the public an explicit stakeholder whose benefit or harm is taken into account. We could stop getting better at facial recognition, online-tailored advertising, automated romantic partnering, and all other kinds of creepy predictive analytics for the next ten years and simply focus on what kind of moral standards we want our AI to subscribe to and promote, and we’d be better off as a society.

9. Empower Health-Data Stewards

By John Brownstein, PhD, epidemiologist, and chief innovation officer at Boston Children’s Hospital, professor at Harvard Medical School, and co-founder of the non-emergency medical transportation startup, Circulation

Healthcare accounts for approximately 18 percent of US GDP, and even with the mandated movement to electronic medical records, patients, increasingly referred to as “healthcare consumers,” are still demanding ownership and portability of their data. Yet paper and unstructured, unsharable, unidentifiable data sets are commonplace in a system demanding a deeper integration of research and clinical expertise to help solve the most difficult challenges of health and disease.

I’ve spent much of my research career thinking through unique ways to bypass the disconnected health data landscape and still produce insights on disease at a global scale. Today, these technologies are leveraging the exhaust of our very public and increasingly digital lives to construct detailed characterizations of individual and population health. Combined with our clinical data, the power to influence health becomes very personal, and it is an area where consumers of healthcare are demanding progress.

For the father of a sick child in France, the need for shared health data was the most personal of journeys. Early last year, this father took to Twitter desperately searching for someone to diagnose his ailing child—his tweet listing a number of genetic markers thought responsible for the disease. A physician at Boston Children’s Hospital noticed the post by happenstance, and within hours, a team of genetics experts and physicians was engaged. Within weeks, the patient’s whole exome was sent to Boston for interpretation and ultimately a diagnosis.

On its face, many will blame technical complexity for the slow movement toward integrated personal-health data sets, but interoperable standards are increasingly commonplace. In reality, the likely culprit lies in reimbursement models that lack incentives for reducing cost and increasing value delivered. It took a realization of new efficiencies and pressure to reduce risk, enabled by technology, for the banking industry to open frictionless data-sharing networks mediated by industry data stewards. In healthcare, the economic benefits of open sharing will need to pressure integration—and integration will create a new economy of health-data stewards trusted by the health consumer.

The solution to open sharing? We, healthcare consumers, must empower trusted data stewards to aggregate all aspects of our very personal health data. Achieving this level of trust will take work on all fronts: creating legislation that protects consumers, expanding reimbursement models that favor value, and building trusting relationships among healthcare providers and the companies entrusted with medical data. Only as these economic and social pressures grow will we see a future where data stewards enable patients to own and port their health information freely.

The best part of achieving a future of frictionless, open health-data sharing? Well, personalized medicine becomes a reality for everyone. Data sets previously thought unrelated to health become the early-warning indicators that prevent disease. Our environments begin acting intelligently to promote wellness, and detecting disease between doctors’ visits becomes the work of advanced, always-learning algorithms operating in the background.

This future is already being realized in isolated systems constructed to test the power of integrated health data. When our team from Boston Children’s Hospital and Merck began testing the relationship between insomnia and Twitter use, patterns emerged showing a relationship between Twitter usage and sleep patterns. Surprisingly, the trends observed were somewhat counterintuitive.

In diabetes management, researchers are already integrating passive data from wearable devices to understand the relationship between activity data and insulin dosing. And companies with remote patient-monitoring solutions are already integrating the first wave of consumer-device data sets so clinicians can begin seeing patients’ progress between visits in a more definitive way.

The aggregation of all aspects of personal health will enable a future where advanced machine learning and intelligence can be applied on a patient level. These experiments in data sharing are just the beginning of what’s already an evolved capacity for machine learning begging for integrated data at scale.

This next frontier in understanding and bettering human health is within sight, but a refocusing on the role of data stewards is needed to get us there.
